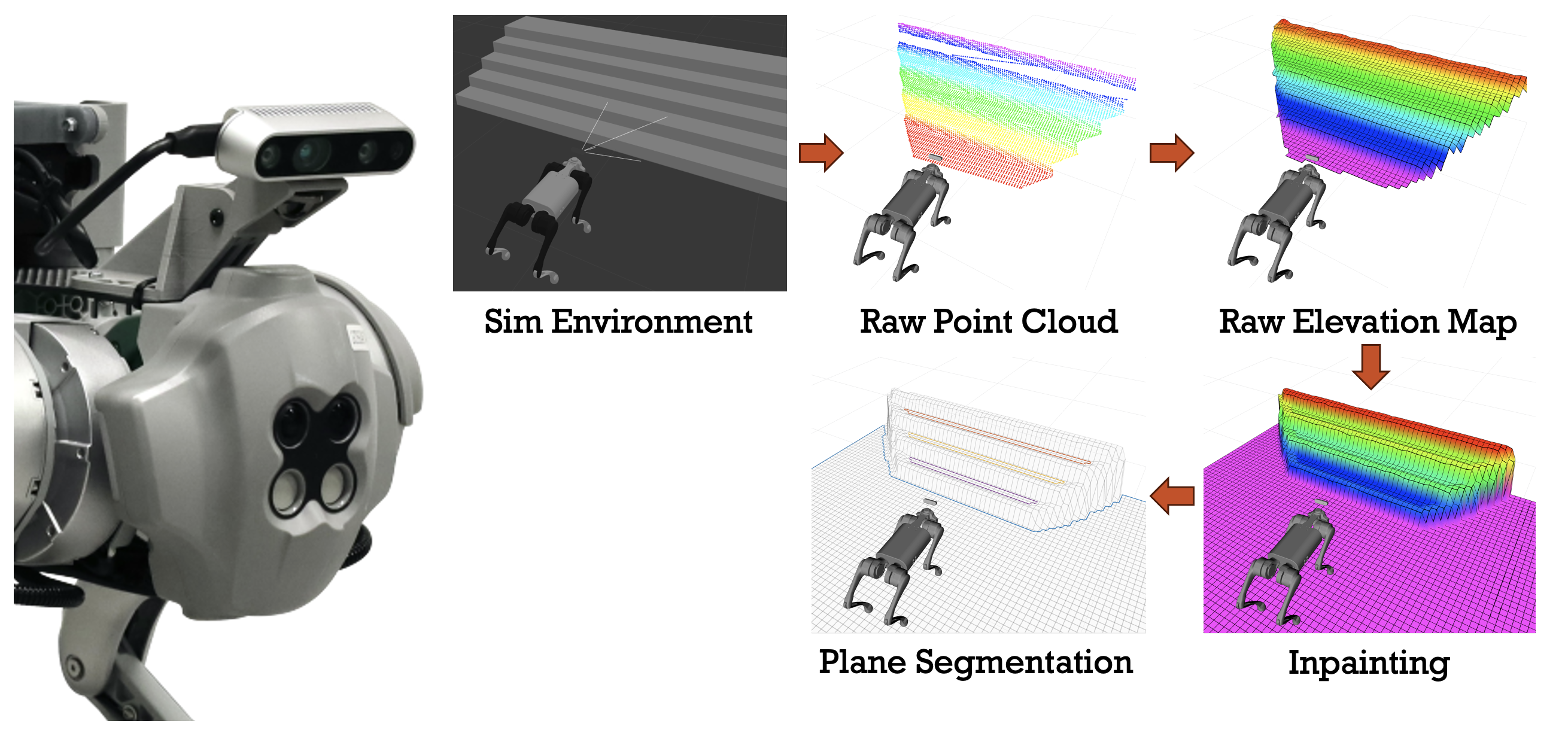

Perceptive locomotion couples a robot's perception with its locomotion control so that it can navigate complex environments safely and efficiently. In our project, we added perceptive locomotion to the Unitree Go1 robot by mounting a RealSense D435i depth camera on the front of the robot. The camera produces point cloud data, which is analyzed to compute step-able areas in the environment.
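To make the depth-to-point-cloud step concrete, here is a minimal sketch of how a depth image can be back-projected into 3D points with a pinhole camera model. It is an illustration only, not the project's actual pipeline: the function name `depth_to_points` and the intrinsics `fx`, `fy`, `cx`, `cy` are assumptions, and in practice the values would come from the camera's calibration.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]


def depth_to_points(
    depth: List[List[float]],
    fx: float,
    fy: float,
    cx: float,
    cy: float,
) -> List[Point]:
    """Back-project a depth image (metres) into camera-frame 3D points.

    Assumes a pinhole model: fx, fy are focal lengths in pixels and
    (cx, cy) is the principal point. These are hypothetical inputs here.
    """
    points: List[Point] = []
    for v, row in enumerate(depth):        # v: pixel row index
        for u, z in enumerate(row):        # u: pixel column index
            if z <= 0.0:                   # non-positive depth = invalid reading
                continue
            x = (u - cx) * z / fx          # horizontal offset scaled by depth
            y = (v - cy) * z / fy          # vertical offset scaled by depth
            points.append((x, y, z))
    return points


# Tiny 2x2 depth image with one invalid pixel.
demo_depth = [[1.0, 0.0], [2.0, 2.0]]
demo_points = depth_to_points(demo_depth, fx=2.0, fy=2.0, cx=0.5, cy=0.5)
```

The resulting points are expressed in the camera frame; they would still need to be transformed into a gravity-aligned robot or world frame before any terrain analysis.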

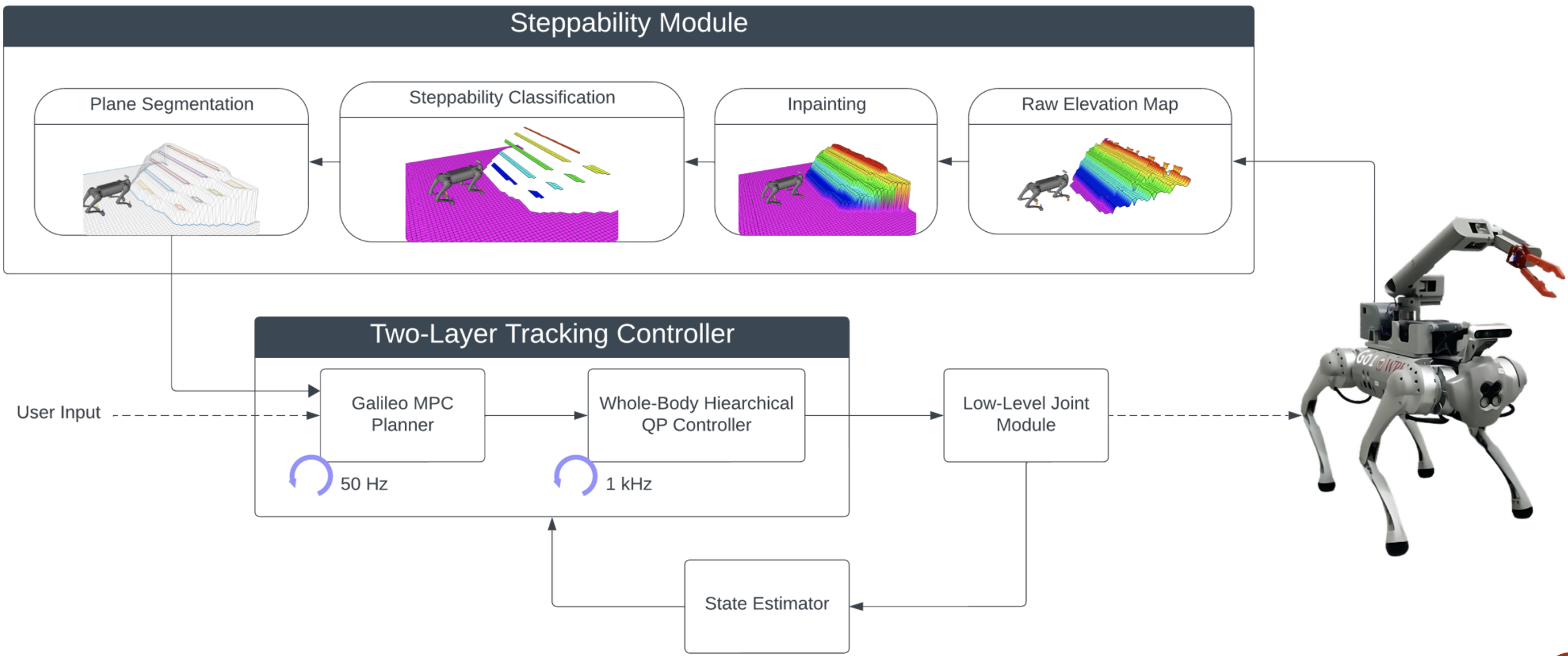

The perception system uses the RealSense D435i depth camera to capture depth information and generate 3D point clouds. This data is processed to identify step-able regions and construct a 3D map of the terrain. The control system, which combines Model Predictive Control (MPC) and Whole-Body Control (WBC), plans and executes safe trajectories in real time. We first integrated and tested the system in simulation using ROS Noetic and Gazebo, then deployed it on the physical robot.
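One simple way to picture the step-able region identification is as a height-map test: bin the points into a 2D grid, then mark a cell as step-able when it is roughly level with its neighbours. The sketch below follows that idea under stated assumptions. It is not the method used in the project, which the text does not specify. The names `build_height_map` and `steppable_cells`, and the parameters `cell_size` and `max_step`, are hypothetical, and the points are assumed to be already expressed in a gravity-aligned frame with z pointing up.

```python
import math
from typing import Dict, Iterable, Set, Tuple

Cell = Tuple[int, int]
Point = Tuple[float, float, float]


def build_height_map(points: Iterable[Point], cell_size: float) -> Dict[Cell, float]:
    """Bin points (x, y, z-up) into a 2D grid, keeping the max height per cell."""
    heights: Dict[Cell, float] = {}
    for x, y, z in points:
        cell = (math.floor(x / cell_size), math.floor(y / cell_size))
        heights[cell] = max(z, heights.get(cell, -math.inf))
    return heights


def steppable_cells(heights: Dict[Cell, float], max_step: float) -> Set[Cell]:
    """Return cells whose four neighbours are all observed and within max_step in height."""
    result: Set[Cell] = set()
    for (i, j), h in heights.items():
        neighbours = [(i + 1, j), (i - 1, j), (i, j + 1), (i, j - 1)]
        if all(n in heights and abs(heights[n] - h) <= max_step for n in neighbours):
            result.add((i, j))
    return result


# Flat 3x3 patch of terrain, one point at the centre of each 0.5 m cell.
flat = [((i + 0.5) * 0.5, (j + 0.5) * 0.5, 0.0) for i in range(3) for j in range(3)]
flat_map = build_height_map(flat, cell_size=0.5)
flat_steppable = steppable_cells(flat_map, max_step=0.1)
```

In this toy case only the centre cell has all four neighbours observed, so it is the only one marked step-able; border cells are treated conservatively as unknown. A real system would typically also consider surface slope and roughness, and would fuse measurements over time rather than use a single frame.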

Perceptive locomotion significantly enhances the safety and efficiency of robotic movement in complex environments. This capability is crucial for applications such as logistics, construction, and planetary exploration. One challenge is map drift caused by the limited field of view of a single camera, which can be mitigated by adding more cameras around the robot’s body. Future work involves integrating the perception system with the Galileo motion planning solver to improve computational efficiency and real-time responsiveness.